I need help putting together an IMPORTHTML (or IMPORTXML) formula for Google Sheets that returns the “Earnings Date” for ticker symbol EA (or any other valid ticker symbol) from CNBC (https://www.cnbc.com/quotes/?symbol=EA&qsearchterm=). In this case, I am trying to import the date “2020-07-30”.

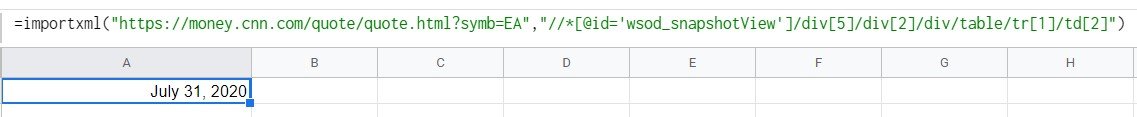

The formula, as I understand it, will be similar to the one below:

=IMPORTXML("https://www.cnbc.com/quotes/?symbol=" & A4 & "&qsearchterm=", "//table/tbody[1]/tr[3]/td[1]")
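To see which cell the XPath `//table/tbody[1]/tr[3]/td[1]` would select, here is a minimal Python sketch using the standard library's `xml.etree.ElementTree` on a made-up table. The markup, the placeholder row labels, and the label-then-value cell layout are all assumptions for illustration, not CNBC's actual HTML. ElementTree supports only a subset of XPath and requires relative paths, so `//table` becomes `.//table` here.

```python
import xml.etree.ElementTree as ET
from typing import Optional

# Hypothetical, simplified table markup used only to illustrate the XPath.
# The layout (label cell first, value cell second) is an assumption,
# NOT CNBC's real page structure.
SAMPLE_HTML = """
<html>
  <body>
    <table>
      <tbody>
        <tr><td>Label 1</td><td>value 1</td></tr>
        <tr><td>Label 2</td><td>value 2</td></tr>
        <tr><td>Earnings Date</td><td>2020-07-30</td></tr>
      </tbody>
    </table>
  </body>
</html>
"""


def select_text(markup: str, path: str) -> Optional[str]:
    """Return the text of the first element matching `path`, or None.

    ElementTree only accepts relative paths, so the formula's
    "//table/..." is written as ".//table/..." here.
    """
    root = ET.fromstring(markup.strip())
    node = root.find(path)
    return None if node is None else node.text


# Same path as in the formula: third row, FIRST cell.
print(select_text(SAMPLE_HTML, ".//table/tbody[1]/tr[3]/td[1]"))
# Third row, SECOND cell.
print(select_text(SAMPLE_HTML, ".//table/tbody[1]/tr[3]/td[2]"))
```

Under that assumed layout, `td[1]` in the third row is the label cell and the date sits in `td[2]`; whether the same holds on the live page depends on CNBC's real markup.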

So far, my time and effort have not yielded results. Any suggestions would be greatly appreciated.