Chris7

This "guy" devoted two extremely long blog posts to artificial intelligence:

The Artificial Intelligence Revolution: Part 1 - Wait But Why

The Artificial Intelligence Revolution: Part 2 - Wait But Why

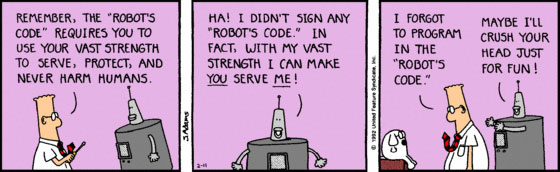

I do think more people need to be concerned about this, and I'm not really sure we as a race should actually be hoping it comes about. But seeing as nobody wants to be the last one to the party, I can see why people would be working on it feverishly.

I guess I just don't have enough faith in the human race to believe that it will end well.

Has anyone here put some serious thought into the potential coming AI revolution?

cd :O)