Elon would say Autopilot is not their SDC software.

And that you still don't need lidars.

Fine. A lot of people are waiting with bated breath to see him deliver an SDC in 2020, as he claimed he could. Lidars make the job a bit easier, and if he can do without them, all the more power to him. It's the results that count.

But if I were him and knew of some weaknesses in my system, I would test for them to make sure the software was fixed. It is not that hard to put a semi-trailer across the car's path and see whether my new software still drives the car under it. I would do that under different lighting conditions. I would make sure my computer now recognizes a parked red fire truck in the car's path, etc.

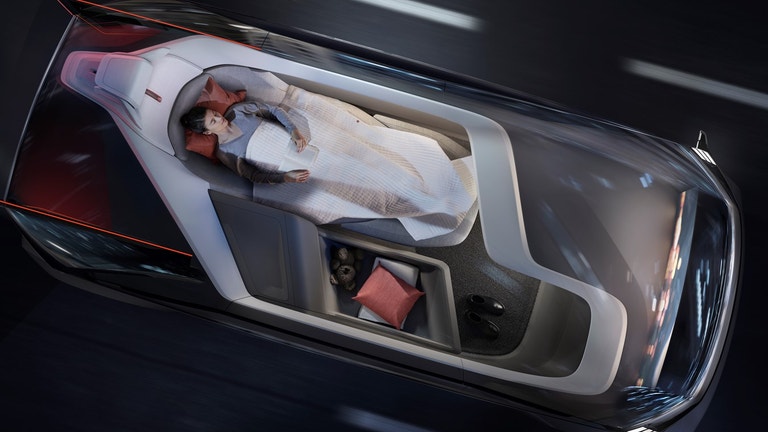

It did not matter when I could blame the drivers for not paying attention. But when I now tell them they can trust the system and go to sleep or look down to text on their phone, I would want to be sure that these known problems are fixed. No? Some people here say it does not matter, because the system does not have to be perfect. Huh?

Here's the syllogism as I see it (maybe I misread something).

Statement A - Human drivers are imperfect.

Statement B - Therefore, an SDC does not have to be perfect to save lives.

Statement C - Brand A autopilot is not perfect, therefore it can still save lives.

A and B are true. But C is total baloney.

The problem is that while perfection can never be achieved in this life, certain minimum qualifications will be needed. Right now, nobody knows how to set the testing requirements. But I look at some of the egregious accidents, and I say that it is not going to work unless they fix the known problems.

There were 33,654 fatal traffic accidents in the US in 2018. That's 92 fatal accidents a day.

How many semi-trailers in the US? 2 million. How many cars in the US? 272 million cars.

Now the tougher question: How many semi-trailer/car encounters on the road each day? How many million encounters?

I would say you have to do something to be sure you run a car under a trailer fewer than 92 times a day. Don't you want to test it out once or twice on a test track?

And that's just for "trailer avoidance". Then you have to worry about running into red fire trucks, which your system has shown itself capable of doing. And then construction barriers. And then who knows what else.

You've got to do some tests to know how "imperfect" your system is.

PS. Let's say each of the 2 million trailers encounters a car only once a day (ridiculously infrequent, I know). If I want to keep my cars from running under trailers more than 92 times a day, that's at most one failure for every 22,000 encounters or so. How many tests do I need to run to be sure?
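To put a rough number on that question, here is a back-of-the-envelope sketch in Python. It assumes every encounter is an independent pass/fail trial with the same failure probability, which is my simplification and surely too kind to the real world. It uses the standard zero-failure bound: if n tests all pass, a failure rate above p is ruled out with confidence c once (1 - p)^n <= 1 - c. The function names and the 95% confidence level are my own picks, not anything from a carmaker or a regulator.

```python
import math

TRAILERS = 2_000_000        # semi-trailers in the US (figure from the post)
ENCOUNTERS_PER_TRAILER = 1  # assumed: one car encounter per trailer per day
DAILY_BUDGET = 92           # underride crashes per day we refuse to exceed


def max_failure_rate(budget, encounters):
    """Largest per-encounter failure probability that stays within budget."""
    return budget / encounters


def flawless_tests_needed(p_max, confidence=0.95):
    """Smallest n with (1 - p_max)**n <= 1 - confidence.

    Assumes independent, identical trials and zero observed failures.
    """
    return math.ceil(math.log(1.0 - confidence) / math.log(1.0 - p_max))


encounters = TRAILERS * ENCOUNTERS_PER_TRAILER
p_max = max_failure_rate(DAILY_BUDGET, encounters)
print(f"allowed failure rate: 1 in {1 / p_max:,.0f} encounters")
print(f"flawless tests needed at 95%: {flawless_tests_needed(p_max):,}")
```

Under those assumptions the answer comes out to roughly 65,000 tests in a row without a single failure, about three times the 22,000 figure. That is the usual "rule of three" (n is about 3/p) at work.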

If I am able to do only 100 tests, then I want to be damn sure that the test results are "flawless". If you already fail 1 out of 100 times, you are not going to have fewer than 92 accidents each day with millions of vehicles. And that's just one of the ways you can kill people.
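For a sense of scale, here is a small Python sketch of what 100 tests can and cannot tell you. The assumptions are mine: independent pass/fail encounters, 2 million trailer encounters a day as in the PS, and a 95% confidence level. The helper names are made up for illustration.

```python
ENCOUNTERS_PER_DAY = 2_000_000  # one encounter per trailer per day, as in the PS
DAILY_BUDGET = 92               # crashes per day we do not want to exceed


def expected_daily_crashes(failure_rate, encounters=ENCOUNTERS_PER_DAY):
    """Average crashes per day if each encounter fails with failure_rate."""
    return failure_rate * encounters


def upper_bound_failure_rate(n_flawless, confidence=0.95):
    """Highest failure rate still consistent with n flawless tests.

    Solves (1 - p)**n = 1 - confidence for p (zero-failure binomial bound).
    """
    return 1.0 - (1.0 - confidence) ** (1.0 / n_flawless)


# Case 1: the system fails 1 test out of 100.
one_in_hundred = expected_daily_crashes(1 / 100)
print(f"1-in-100 failure rate: about {one_in_hundred:,.0f} crashes a day")

# Case 2: all 100 tests pass. How bad could the system still be?
bound = upper_bound_failure_rate(100)
print(f"after 100 flawless tests, failure rate could still be {bound:.1%}")
print(f"which would mean up to {expected_daily_crashes(bound):,.0f} crashes a day")
```

A 1-in-100 failure rate works out to about 20,000 crashes a day against a budget of 92. Even a flawless run of 100 only rules out failure rates above roughly 3%, so it is a bare minimum, not proof.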